Thingiverse

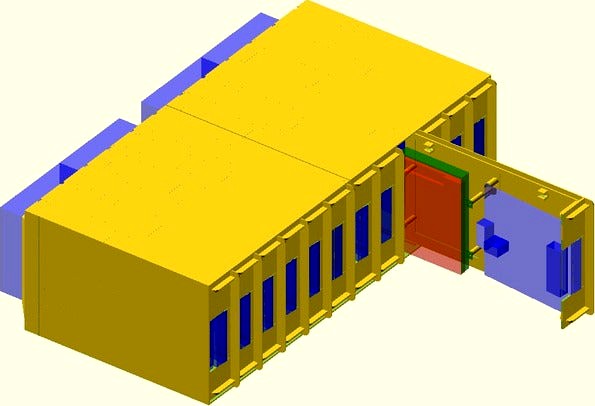

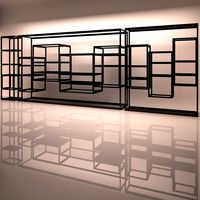

NUC shelf for datacenter rack - 3U - 12 servers by Guillaume_F

by Thingiverse


The NUC shelf ... or how to hold 12 NUCs in 3 rack units.

Real world

Tested and approved for more than 2 years with 3x96 NUCs in a datacenter (cold corridor), in HPC cluster and grid computing configurations.

No outage.

High density

2 shelves on a simple 19" rack tray.

You can fill a 42U bay with 96-120 servers drawing only 2 to 4 kVA, depending on the NUCs.
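As a rough sketch of the arithmetic behind these figures, the Python snippet below converts a server count into 3U rows and rack units, and divides the quoted apparent power by the server count. Pairing 96 servers with 2 kVA and 120 servers with 4 kVA is an assumption made only for illustration (the text only says the range depends on the NUCs), and the function names are hypothetical.

```python
# Rack-density arithmetic: 12 NUCs per 3U row (figures from the description).
NUCS_PER_ROW = 12   # two shelves of 6 line-cards each
UNITS_PER_ROW = 3   # rack units occupied by one row


def rows_needed(servers: int) -> int:
    """Number of 3U rows needed to hold `servers` NUCs (ceiling division)."""
    return -(-servers // NUCS_PER_ROW)


def rack_units_needed(servers: int) -> int:
    """Rack units occupied by the rows holding `servers` NUCs."""
    return rows_needed(servers) * UNITS_PER_ROW


def va_per_server(total_kva: float, servers: int) -> float:
    """Average apparent power per server, in VA."""
    return total_kva * 1000 / servers


# Illustrative pairing only: low count with low power, high count with high power.
for servers, kva in ((96, 2.0), (120, 4.0)):
    print(
        f"{servers} servers: {rows_needed(servers)} rows, "
        f"{rack_units_needed(servers)}U, "
        f"~{va_per_server(kva, servers):.1f} VA per server"
    )
# 96 servers: 8 rows, 24U, ~20.8 VA per server
# 120 servers: 10 rows, 30U, ~33.3 VA per server
```

Under these assumptions the shelves occupy 24U to 30U of the 42U bay.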

Power sharing also greatly reduces the leakage current (one switching power supply for 12 NUCs).

Cost killer

This shelf lowers the cost of a node to nearly the price of the NUC + SSD + RAM.

PSU, Fans, ABS material, cables: ~ $30 per NUC.

This makes the setup the most efficient, dense, upgradeable and low-cost on the market.

The cost of an Ultimaker 2 is amortized once the first row (2x6) is complete, compared with the cost of existing solutions (x64 Intel or AMD).

Existing solutions are also often proprietary, with proprietary boards.

Other low-cost solutions

All other low-cost rack solutions based on NUCs use the original NUC box.

This approach is not suitable for a datacenter, for several reasons:

the cooling is not efficient (warm air gets stuck between the boxes) and the fans are not industrial grade

no power sharing with an industrial PSU: many switching power supplies = high leakage current = risk of tripping the differential protective relay = going dark

separating the cabling (network / power) is impossible, which makes a huge mess

Upgradeable

The project is parametric. You can adapt the line-card to new generations of NUCs with minimal impact. You only have to reprint the line-card (1 hour on an Ultimaker).

Efficiency and reliability

The 12 NUCs can be powered with one single power supply.

You can even make it redundant with isolated PSUs. Our tests show that this is not necessary.

Each shelf (6 units) is cooled by 2 fans.

The cooling is more efficient than the NUC's own fan, which can therefore be removed.

The air flow generated by one fan (industrial and durable) is evenly distributed across 3 NUCs. The line-card (with the raised 2.5" disk support) is also designed to maximise the effect on the NUC heat sink.

Design and printing

It is a classic and efficient design. Front=cold, Back=warm.

Network cabling is exclusively done at the front of the rack.

Power cabling is exclusively done at the back of the rack.

Parts are designed for fast printing.

You can lower the print quality (high speed) without affecting the strength of the parts or the overall structure of the shelf. With an Ultimaker 2 (Olsson block), we reached a production rate of one line-card per hour.

It is still ABS. Don't expect to sit on a shelf without destroying it.

The line-card is designed to be light and to rely on the rigidity of the hardware.

Each piece is already prepared to print well without warping.

You don't need to add supports or a brim.

Where a brim was necessary, it is included in the model (one single layer).

Once those parts are printed, simply remove the thin surrounding sheet by hand.

Files

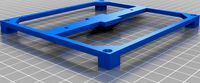

linecard : board/disk holder, sliding in the shelf. 6 per shelf.

shelfback : back of the shelf. 1 per shelf.

shelffront : front of the shelf. The line-cards slide in here. 1 per shelf.

shelftopbottom : top/bottom sheets to glue on the shelf-back/shelf-front. 2 per shelf.

shelfleftright : left/right sheets to glue on the shelf-back/shelf-front. 2 per shelf.

fancase : back fan holder. Screwed on the shelf-back. 1 per shelf.

All other files are dependencies (dim, pin, misc...) or for model evaluation.

You should download all the files and open all.scad to check the consistency of the sources.

To purchase

NUCs (e.g. NUC5i5MYHE, which is vPro equipped, necessary for the remote console)

Fans. 92x92x25mm. E.g. SUNON MagLev Motor Fan ME92252V2-0000-A99

PSU. 12-24 Vdc with enough power (20 W per line-card + fans). E.g. Tracopower TXH 600-124

NUC internal power connector 2x2 and pins (Molex Micro-Fit 3.0: 43030-0007, 43025-0400)

Electric cable (e.g. Alphawire 5012C SL005)

Crimping tool
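The PSU sizing rule in the list above (20 W per line-card plus the fans) can be sketched as a small Python check. The per-fan wattage, the 80% headroom factor and the 600 W rating used in the example are assumptions for illustration only; take the real values from the fan and PSU datasheets. The function names are hypothetical.

```python
# Minimal PSU sizing sketch: 20 W per line-card (from the text) plus fans.
WATTS_PER_LINE_CARD = 20.0   # figure given in the purchase list
WATTS_PER_FAN = 3.0          # ASSUMPTION: placeholder, not from a datasheet


def psu_load_watts(line_cards: int, fans: int,
                   watts_per_fan: float = WATTS_PER_FAN) -> float:
    """Estimated DC load for the given number of line-cards and fans."""
    return line_cards * WATTS_PER_LINE_CARD + fans * watts_per_fan


def psu_is_sufficient(psu_watts: float, line_cards: int, fans: int,
                      headroom: float = 0.8) -> bool:
    """True if the load stays within `headroom` (ASSUMPTION: 80%) of the rating."""
    return psu_load_watts(line_cards, fans) <= psu_watts * headroom


# One 3U row: 12 line-cards and 4 fans (2 fans per shelf of 6).
load = psu_load_watts(line_cards=12, fans=4)
print(load)                             # 252.0
# ASSUMPTION: a 600 W rating is used here purely as an example value.
print(psu_is_sufficient(600, 12, 4))    # True
```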

NUC

Open the NUC case

Extract the board and the 2.5" disk holder

Remove the NUC fan

Screw the board and the disk holder onto the line-card, simply reusing the screws from the NUC. The line-card is designed for them.

Shelf assembly

Take a bottom plate and glue the front and back parts onto the large recessed area designed for them.

Glue the top plate the same way.

Glue the left and right plates

Screw the fans to the fan holder part (desktop fan screws)

Screw the fan holder part to the back part (desktop fan screws)

The simplest effective glue is ABS dissolved in acetone (leave ABS and acetone in a closed jar for 2-3 hours, until you get a viscous paste).

It provides 80-90% of the strength of printed ABS once cured.

This mixture is very sticky and dries fast (10-20 seconds).

It instantly consolidates and merges the parts, like a weld.

Power distribution

The power cable has a crimped Molex Micro-Fit 3.0 on one side (NUC internal connector). The cable is fixed in the two line-card cable holders and goes through the fan case. There is one large hole for each line-card.

You can slide a single line-card in or out in production without shutting anything down. The power cable can slip in and out of the back hole.

These power cables can be soldered, in groups of 6, onto a simple 0.75mm power cable that attaches properly to the PSU (we used U-shaped terminal connectors here).
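To get a feel for the current carried by one such shared feed, the Python sketch below applies I = P / V to 6 line-cards at the 20 W per line-card figure from the purchase list, for the two ends of the 12-24 Vdc range. It covers the line-cards only (fans are left out), the function name is hypothetical, and it says nothing about what a given cable or connector is rated for; check the datasheets for that.

```python
# Current on one shared feed cable: I = P / V, line-cards only (fans excluded).
WATTS_PER_LINE_CARD = 20.0   # figure given in the purchase list


def feed_current_amps(line_cards: int, supply_volts: float) -> float:
    """Estimated DC current drawn through a feed shared by `line_cards`."""
    if supply_volts <= 0:
        raise ValueError("supply_volts must be positive")
    return line_cards * WATTS_PER_LINE_CARD / supply_volts


for volts in (12.0, 24.0):
    print(f"{volts:.0f} V: {feed_current_amps(6, volts):.1f} A")
# 12 V: 10.0 A
# 24 V: 5.0 A
```

Under these assumptions, running the shelf at 24 V halves the current on the shared feed compared with 12 V.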

PSU

The PSU can be held at the back of the 19" rack. I provide an example in the psu.scad file which fits the Tracopower PSU. The two identical pieces are screwed onto the PSU and inserted in a simple 19" 1U front plate (installed at the back of the 19" rack).

The third piece is a holder to secure the power cables going to the shelf.

Ecological footprint

NUCs are small (far fewer components than server boards) and highly power efficient.

The shelf is ultra-light to manufacture, with a minimal transport footprint.

The parts are upgradeable and can be fully recycled to make new filament and new servers.

When a motherboard is changed/upgraded, it can go back to its original Intel box and start a second life as a desktop or an HTPC.

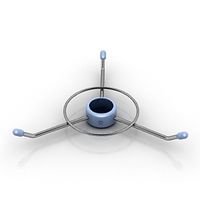

